As of this past weekend, I’ve largely finished bringing over changes from my standalone synth tool into the game. It’s not totally done yet; there’s still a lot of optimization left to do, but it’s stable enough that I can move on to other tasks for a time.

Porting diffs between these two environments is always a little bit more involved than I expect due to some considerations necessary for supporting a latency-free gameplay experience. The standalone tool only plays one “tune” at a time. A tune may be a looping piece of background music or a sound effect; in either case, it’s a single thing that may use all four available channels (two pulse waves, a triangle wave, and a random noise channel). In this environment, I never have to worry about how to handle multiple overlapping tunes, but more importantly, I don’t have to worry about inserting data into the stream just ahead of the write cursor, which greatly simplifies the implementation. This is nice for prototyping behavior in the standalone tool, but these differences do need to be reconciled when bringing these changes into the game.

Let’s take a step back and review how audio buffers work. An audio buffer is a block of memory that contains raw waveform data. For one-shot sound effects or very short loops, the data can often be loaded in its entirety, thrown over to the audio device, and never touched again. For longer pieces that would be impractical to keep in memory all at once, we have to stream the data. In this case, we allocate an audio buffer of a fixed size and dynamically write and rewrite its data as the audio device is playing it. If our source is a large WAV file, we may be able to copy the data directly; if it’s in MP3 or Ogg Vorbis format, we’ll have to decode the data first before copying it into the buffer. In the case of Super Win and Gunmetal, the source is in a minimal MIDI-like event timeline format, and the waveform data must be synthesized in real time before being copied into the buffer.

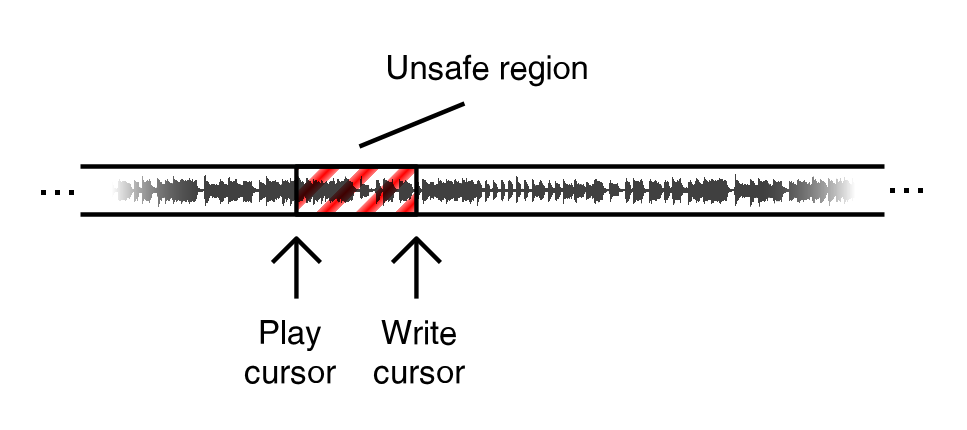

That’s not too bad. Each frame, we can query the audio device to find the cursor positions in the buffer. We get two cursor positions back: a play cursor and a write cursor. The play cursor indicates the point from which the audio device is currently reading and playing data. The write cursor will be a short distance ahead of the play cursor. This indicates the point at which we can safely write new data into the buffer. The region between the two cursors, which the audio device will be playing imminently, is considered unsafe for writing. Knowing these, we can write some portion of data starting from the write cursor and then sleep for a while, waking in time to continue writing before the device catches up to us.
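
To make the cursor arithmetic concrete, here is a minimal Python sketch of writing into a circular buffer starting at the write cursor. The function names (`writable_span`, `write_wrapped`) and the use of a plain list as the buffer are assumptions for illustration, not the game's actual code or any particular audio API.

```python
def writable_span(play_cursor, write_cursor, buffer_size):
    """Return (start, length) of the region that is safe to write.

    The unsafe region runs from play_cursor up to write_cursor, possibly
    wrapping around the end of the buffer. Everything else, starting at
    write_cursor, is safe.
    """
    unsafe = (write_cursor - play_cursor) % buffer_size
    return write_cursor, buffer_size - unsafe


def write_wrapped(buffer, start, samples):
    """Copy samples into the circular buffer, wrapping at the end.

    Returns the position just past the last sample written, which is
    where the next write should begin.
    """
    size = len(buffer)
    for i, sample in enumerate(samples):
        buffer[(start + i) % size] = sample
    return (start + len(samples)) % size
```

In practice only a portion of the safe span is written each frame; the rest is filled on later wake-ups, before the play cursor catches up.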

For a single piece of background music, that’s pretty much the end of the story. Where this gets a little tricky is in dealing with sound effects that must play quickly in reaction to gameplay events. In order to minimize the perception of latency, we need to insert these into the stream as close to the play cursor as possible. As we know, the closest we can get to the play cursor is the point designated by the write cursor, so we want to insert the new data there. We will almost certainly have already written data from another tune to this region, though, so we need to make sure to mix these together correctly and not obliterate existing data.
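
One simple way to mix without obliterating existing data is to add the new samples to what is already in the buffer and clamp the result. The sketch below assumes signed 16-bit samples and plain additive mixing; `mix_into` is a hypothetical helper, and the post does not say which mixing scheme the game actually uses.

```python
INT16_MIN, INT16_MAX = -32768, 32767


def mix_into(buffer, start, samples):
    """Add samples onto existing data in a circular buffer.

    Existing contents are kept and summed with the new samples. The sum
    is clamped to the signed 16-bit range so overflow clips instead of
    wrapping around.
    """
    size = len(buffer)
    for i, sample in enumerate(samples):
        idx = (start + i) % size
        mixed = buffer[idx] + sample
        buffer[idx] = max(INT16_MIN, min(INT16_MAX, mixed))
```

Calling `mix_into(buffer, write_cursor, effect_samples)` layers a sound effect over the music already written ahead of the write cursor.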

Where this gets really tricky is in applying realtime effects like reverb and filters to the entire mix. Because we may wish to insert new data into the stream in response to gameplay events, we can’t know for sure that a region of the buffer is completely finalized and won’t be changing again until the write cursor has passed it and it has entered the unsafe region between the play and write cursors. At that point, it is too late to apply effects, as we can no longer alter that portion of the buffer. Instead, what I do is save off the state of each effect as of wherever the write cursor was on the previous frame. From there, I can step forward through the data that has since entered the unsafe region, up to the current write cursor, and save off the state of the effect at that point. These saved states give me a “known-good” position from which I can advance the effect forward to the end of whatever data I’m writing. If I end up inserting new data into the stream before the write cursor has advanced, I can apply the effect again starting from the same point and trust that the output will be correct. Only once the write cursor has advanced and I know that the data in the unsafe region is final do I advance the saved state of the effect up to the new write cursor and begin the process again.
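
The save-and-replay idea can be sketched in Python as follows. The names `OnePoleLowPass` and `CheckpointedEffect` are hypothetical, the low-pass filter is only a stand-in for any stateful effect, and the sketch assumes the unprocessed (dry) mix is kept around so the effect can be re-applied to it.

```python
import copy


class OnePoleLowPass:
    """Toy effect whose only state is its previous output sample."""

    def __init__(self, alpha):
        self.alpha = alpha
        self.prev_out = 0.0

    def process(self, samples):
        out = []
        for x in samples:
            self.prev_out += self.alpha * (x - self.prev_out)
            out.append(self.prev_out)
        return out


class CheckpointedEffect:
    """Keeps a known-good effect state at the last seen write cursor."""

    def __init__(self, effect):
        self.saved = effect

    def commit(self, finalized_dry):
        """Advance the saved state over samples that can no longer change."""
        self.saved.process(finalized_dry)

    def render(self, pending_dry):
        """Process still-editable samples on a copy of the saved state.

        The saved state is left untouched, so this can be repeated
        whenever new data is mixed into the pending region.
        """
        scratch = copy.deepcopy(self.saved)
        return scratch.process(pending_dry)
```

Because `render` always starts from the same saved state, re-rendering after a sound effect is mixed in produces the same output as if the effect had run over the final data once.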

The exact nature of these saved states depends on the effect, but they generally involve saving off waveform data or something calculated from waveform data. For temporal effects like reverb, I maintain a series of ring buffers of decreasing sizes that can be used to produce a dense array of echoes. For first-order low-pass and high-pass filters, I only need to save off the input and output values for the previous waveform sample. (For Nth-order filters, the previous N values could be recorded instead.) For dynamic DC bias, I maintain a running average of the wave values over a window and shift the output by this mean to keep it roughly centered on the zero line.
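
As an illustration of how small these states can be, here is a Python sketch of a first-order high-pass filter and a running-mean DC bias correction. The coefficient `a`, the window size, and all names are assumptions chosen for the example; the filter is the textbook first-order form, not necessarily the one used in the game.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class HighPassState:
    """Everything a first-order high-pass filter needs to resume."""
    prev_in: float = 0.0
    prev_out: float = 0.0


def high_pass(samples, state, a):
    """First-order high-pass: y[n] = a * (y[n-1] + x[n] - x[n-1])."""
    out = []
    for x in samples:
        y = a * (state.prev_out + x - state.prev_in)
        state.prev_in, state.prev_out = x, y
        out.append(y)
    return out


class DcBiasRemover:
    """Subtracts a running mean over the last `window` samples."""

    def __init__(self, window):
        self.history = deque(maxlen=window)
        self.total = 0.0

    def process(self, samples):
        out = []
        for x in samples:
            # Drop the oldest sample from the sum before the deque evicts it.
            if len(self.history) == self.history.maxlen:
                self.total -= self.history[0]
            self.history.append(x)
            self.total += x
            out.append(x - self.total / len(self.history))
        return out
```

Since the filter state is just two numbers, processing a block in pieces gives the same result as processing it in one pass, which is what makes saving and restoring it cheap.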

The downside to this method is that I often end up synthesizing data or applying effects more than once, and those costs begin to add up. It’s possible that in the future I may move away from realtime synthesis entirely in favor of converting these synth tunes into WAV, MP3, or Ogg Vorbis formats upfront. There are advantages and disadvantages to each option. Improving runtime performance is a very compelling argument for this conversion, as perf is becoming increasingly important as the synthesis grows more complex and CPU-intensive. On the other hand, the current implementation keeps the audio data drastically smaller on disk, which is nice not only for decreasing download times but also for minimizing the size of my source control repositories, as binary files generally can’t be diffed and must be stored in their entirety for even small changes.

With any luck, this should be the last devlog for a while that’s devoid of screenshots. I know I’ve been leaning hard on the walls of text recently, and now that this work is wrapping up, I should hopefully be able to get back to producing new content again. Stay tuned!