Monthly Archives: October 2015

10 Things I Learned In One Year Of Keeping A Devlog

This is my last week of regular updates before I’m off on paternity leave. I have a short recap video ready to go on Wednesday, but beyond that, I can’t guarantee what my schedule will look like. I already wrote a long blog about being one year into development on Gunmetal Arcadia and one year out from the launch of Super Win the Game, but since I’ll be taking a short break from documentation, I thought it would be interesting to talk a little bit more about the documentation process itself, and some of the things I’ve learned in the process. Plus I couldn’t resist the clickbait headline.

I really enjoy writing. I guess that shouldn’t be too surprising; I’ve maintained a blog in some form or another almost as long as I’ve been on the internet, but it had been a while since I’d kept a development-oriented blog that I updated on any sort of a regular basis, and I had some concerns that I might not be motivated to keep writing every week. As it’s turned out, I have more than enough material to pull from, and though the typical week’s update is usually an overview of the previous week’s worth of development tasks, I have the flexibility to cover anything at all related to development here, from deep dives into my engine, tools, or processes to tips for first-time exhibitors.

And the writing itself is fun. That’s the important (and sometimes surprising) part. Sometimes I think I like writing even more than making games. It scratches a different creative itch, one that’s more immediate. There’s a thrill in publishing content on a regular basis that you just don’t get when you ship a game once a year (or two, or whenever). Launching a game is exciting, but it’s also stressful and the rewards are somewhat muted by comparison. Games, even free games, have a higher barrier to entry than a blog or a video, and you don’t get that thrill of knowing that your work is just a click away. Publishing a blog or a video is instant gratification.

Keeping a devlog forces me to schedule my time better. Very often, especially when I’ve had events and associated items to prepare and contract work encroaching on my schedule, I’ve had to honestly ask myself, “Am I going to have anything to write about this week?” This has become doubly critical since I launched the video series, as my goal has been to minimize overlap between the blog and video each week. So when I find myself having to ask whether or not I have at least two substantial new pieces of development, it reinforces just how precious my time is. I’ve had many weeks in which I dive into a task that I’ve been putting off simply because I know it will give me something to write about in a day or two.

In some cases, I haven’t had new development work to write about, but I’ve been able to turn to other sources, detailing bits of how my engine works, high-level designs for the future, and other things beyond a checklist of recent tasks. Sometimes this feels like a cop-out, and it hammers home the guilt of not having new development to discuss, but I also tend to think those can be fascinating topics, and I might not ever broach those were I not desperately in need of material.

Animated GIFs are the best. I started recording animated GIFs of my recent work back in March, and it’s caused a shift in how I think about new features. I find myself prioritizing tasks that can be conveyed visually, or looking for ways to convey elements that might otherwise be obscured. There was a recent article on Gamasutra built around a conversation between Rami Ismail and Adam Saltsman in which they discussed the benefits of developing games for spectators and how these same principles can benefit players as well. I’m finding the same is true of showing games in development; if an animated GIF can’t provide enough visual cues to communicate the experience, it’s highly probable the player won’t have enough feedback, either. Building for animated GIFs means building a better game in general.

My progress is more apparent to myself. Super Win the Game was developed in eight months, You Have to Win the Game in five. I’m now a year into Gunmetal Arcadia, and I don’t have a clear finish line in sight. And yes, some of that time has been spent on other things, and yes, it’s a big game, and yes, I’ve already made a lot of progress, but I still often find myself feeling like things are moving too slowly. That can be demoralizing. That’s where having a week-by-week chronicle of exactly what I was doing over the last year is nice. It’s easier to see how things are stacking up and easier to be proud of my accomplishments to date rather than critical of myself for not working faster.

I still can’t estimate tasks. I tried once, at David’s insistence, to estimate a projected release date for Gunmetal Arcadia by making a chart detailing all my tasks from the current day to launch, with time estimates for each. I had zero faith in it at the time, and that was before spending two months on free DLC for Super Win the Game and taking on contract work and launching a video series and having a kid. I bet it would look pretty silly now.

The point is, I can’t estimate tasks, and I won’t. And that in turn means I can’t gauge a release date. But I feel like I have a pretty good gut instinct for scope, and more importantly, as a solo developer, I’m in a position to be flexible and adapt to scope and schedule changes. It’s exactly that flexibility that led to the creation of Gunmetal Arcadia Zero. That put me on a path where it made sense to prioritize content production, and I feel like that’s turned out pretty well, as the last few weeks or months of enemy development has shown.

It feels like I’m trending in the right direction. I’ve probably talked about it before, but the primary reason I started this blog was in reaction to the lack of response I got to the announcement and launch of Super Win the Game. With that game, I tried to manufacture awareness with a handful of press releases every few months, and I failed. So with Gunmetal Arcadia, I made a decision to grow awareness more naturally with a constant stream of information. It feels like that’s working. Will that be The Thing that solves my low sales? I don’t know. There probably isn’t Just One Thing. But as I mentioned in my one-year postmortem, it’s not as critical that I have an Eldritch-scale hit as I once thought. It’s still too early to say for sure whether this is going to achieve my goals, but the trends are encouraging.

I wear more hats than ever. So many hats. Game developer, blogger, video producer, contract worker, occasional email replier-to, family man, not complete social recluse…that’s a lot of hats. I’m not always good at wearing that many hats. Drink every time I say “hats.”

It’s a weird combination of adrenaline and self-pity that fuels me when the weight of the hats starts crushing down, a bleary-eyed, caffeine-propelled fixation on overcoming the odds. I don’t know if that’s healthy. I mean, I know it’s not healthy. But it gets things done.

I still have further aspirations. My ambitions are limitless. The more I do in this vein, the more I want to do. I’ve done a couple of dev streams and I’m currently dangling those as a carrot to fund my Patreon, but more recently, I’ve been doing some game streams just for fun, and I’m really enjoying those. In fact, I’m playing more games in general than I have in a long time. I’m finding myself itching to write or speak about these experiences from a perspective of fan and critic and designer. I’ve talked about this a few times now, and it’s hard to say if anything will come of it because it feels impractical for a number of reasons (too much on my plate already, too similar to what others are already doing, etc.), but it’s been stuck at the back of my mind for a while and I figured it was worth mentioning again.

This blog tends to be more recounting of personal experiences and less practical knowledge and education, but I’ve been wanting to branch out in that direction for some time. I mentioned my plans for a GDC talk recently, and as the deadline for the first draft is quickly approaching, I’ve been doing a little work on that this week. I’m excited about where it’s going, and hopefully it’ll turn out to be a good resource for future indie developers.

I have no idea what I’m doing. Feedback is an interesting beast. You want it until you have it, and then you do your best to ignore it. I tend to rely on gut instinct most of the time, for better or for worse. There are many other ways I could structure and push this blog. Should I be more high-level? More deep dives? Should I enable comments? Should I link old posts more often on social media? Should I blog more? Less? Change up my schedule? More art? More in-dev builds? More videos? More streams?

I know what sort of content I like to see on others’ blogs, and I try to aim for that, but it can be difficult to look at my own work with a critical eye. I’m not always sure my own perceptions or expectations align with everyone else’s. I can’t read my own work without knowing what went into both the development and the writing. I can’t read without knowing what comes next. Sometimes I’m not sure who I’m writing for. Gamers? Developers? Both? Someone else entirely?

This game is still exciting to me. Losing motivation is one of the more widely discussed aspects of solo game development, and I’m not going to pretend I don’t have days where I have to drag myself out of bed and force myself to work, but a year out from its inception, the promise of what Gunmetal Arcadia will be still gets my blood pumping. On more than one occasion, I’ve caught myself thinking, “Wouldn’t it be awesome if there were an NES game that played like Zelda II but with roguelike elements… oh yeah, I’m making that game.”

But even beyond the core promise, the day-to-day implementation work is actually fun, and I think the devlog is a large part of that. Development can sometimes feel thankless, programming in particular, especially when it’s on systems that the average player would never observe. Knowing that I can write about those and detail exactly what goes into the apparently mundane aspects of development makes it a little more palatable.

This blog and the accompanying video series are also a much wider outlet for communicating my ongoing development than the occasional tweet I did for Super Win, especially once you account for forums and such where I can repost the content. It’s sometimes hard to gauge exactly how many readers and viewers I’m reaching, but it for sure doesn’t feel like I’m just talking into the void, and that’s the important thing.

Anyway, on that note…

::Disappears into the night::

Episode 19: “Continuity”

Grab Bag 6

Having spent essentially my entire Thursday writing code, I felt like doing something completely different on Friday. I had a mental image of a cartoony rendition of Vireo jumping and striking a slime, and I wanted to see whether I could capture it.

I started with a quick notepad doodle and went through a few iterations of scanning, printing a light image I could trace over, refining, and repeating. Once I was happy with the shapes, I scanned it one last time and traced over it with vector shapes. I made a few more changes to the shapes once it was in that form, replacing the pointy edges of the sword with rounded ones and shifting arms and legs around to look a little more natural.

The design and colors were based roughly on the painting I did earlier this year for the promotional flyers, with a few superficial differences. As I talked about in a video a couple weeks back, I don’t consider myself an artist, and part of that is that I don’t really do character concepts in advance, preferring to make things up as I go. So it’s nice to have a few (mostly consistent) references for my lead character now.

Having already been through the whole process of sketching, refining, converting to vector, and coloring for Vireo, the slime monster went substantially faster.

I don’t really know what I’ll do with these characters yet. I’m not happy enough with it to make it my cover art, probably, but I might paint in a backdrop at some point and maybe it can be a wallpaper for the Steam trading cards system or something.

I made some extensive changes to my synth tools back in June, but I hadn’t had much of an opportunity to put them to use until this week, when I finally wrote my first complete piece of music using these new voices. I’m super happy with how it turned out. Take a listen:

On a subjective level, I think this piece has a really strong hook, but even from a strictly technical perspective, there’s so much more going on here than what I was capable of doing when I wrote the music for Super Win the Game. I had no support for different instruments or voices in that game; I could set the duty cycle of the pulse waves per channel, but that was about the extent of it.

For Gunmetal Arcadia, I’ve leveled up my tools to allow more expression while still staying roughly within the limitations of the 2A03 sound chip. I’m still limited to the usual four channels, but I can author a variety of different instruments that may be played on each channel. (I’m ignoring the DPCM channel for now, but that may change at some point if I decide I really need some good drum samples.)

In total, there are seven different instruments in this piece. The lead voice was my attempt to recreate the sounds of Zelda II’s temple theme. It is a pulse wave with a 12.5% duty cycle and some pronounced vibrato.

The descending toms are a fun one. These are played on the triangle wave channel and are created by doing a very quick pitch shift from a full octave above the target note. These are typically played over the same noise channel bursts that I use to approximate kick and snare sounds. Because these occupy the triangle wave, they are mutually exclusive with the bassline, but that didn’t really create any problems on this particular piece.
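To make the "quick pitch shift from a full octave above" idea concrete, here is a minimal Python sketch of such a pitch envelope. It is not the synth tool's actual code: the function name `tom_frequency`, the `sweep_seconds` default, and the choice to glide evenly in octaves are all assumptions made for illustration.

```python
def tom_frequency(target_hz, t, sweep_seconds=0.08):
    """Frequency (Hz) of the note t seconds after it starts.

    The note begins one octave above the target (2x the frequency) and
    glides down to the target over sweep_seconds. Both the duration and
    the shape of the glide are illustrative assumptions.
    """
    if t >= sweep_seconds:
        return float(target_hz)
    progress = max(0.0, t / sweep_seconds)
    # Interpolate in octaves (exponentially in Hz): 1 octave up -> 0 octaves up.
    return target_hz * 2.0 ** (1.0 - progress)
```

Under the same assumptions, a much shorter `sweep_seconds` gives a brief blip on the attack rather than an audible descent.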

I experimented a little with the bassline in this tune, as well. The volume of the triangle wave can’t change, which limits its versatility compared to the pulse waves, but I tried to give each note a little bit of a punchier sound by again doing a quick pitch shift. This one is much faster than the toms’, and it creates a sort of hard blat on the attack. It’s a sort of digital, synthy sound, not terribly natural, but it’s at least a little more interesting than a vanilla triangle.

The sort of creepy sounding swells in the first part of the tune (the “verse,” as I tend to think of these things as following a pop song verse-chorus-bridge structure) use pulse width modulation, dynamically altering the duty cycle of the pulse wave to create a sort of “swirling” sound.

Moving on to game work, I’ll start with a quick thing from a little over a week ago that didn’t make it into last week’s blog.

I have no plans to include the sort of fully submerged swimming bits like in Super Win the Game, but I did think it would be useful to have some swampy shallows like in Zelda II, something that can impede movement and create tension.

When I first set up the Gunmetal Arcadia codebase, I nuked all the swimming code from Super Win, so I ended up selectively bringing back little bits of that.

Super Win defined two different types of fluids: water and “toxic” (a catch-all for acid, lava, or anything else requiring an additional powerup to enter safely). I haven’t yet brought over the code related to toxic swimming, as I’m not sure whether this will apply to Gunmetal, and I’d almost certainly want to represent it differently if it did. I can imagine a scenario where I might want Metroid-like lava that inflicts damage over time. That feels like a more useful alternative to the instant death lava pits of Zelda II, as I already have a few instant death opportunities in Gunmetal.

I was hoping to create two types of flying enemies this week, as I tweeted in advance of the work, but ultimately I only had time to do the first. (Whenever I get around to it, the second will be something akin to the bats in Zelda II, something that hangs on the ceiling, only dropping to attack when the player gets near.)

For this one, tentatively called the “hoverfly” and using a placeholder asset that I previously used for my Medusa heads, I disabled physics (gravity) and decoupled horizontal and vertical movement. Vertical motion follows a sine wave, not unlike the Medusas, while horizontal motion is based on the distance in x-coordinates between the hoverfly and the player. It accelerates faster when it is far away, but its maximum velocity is capped to prevent it from reaching ludicrous speeds, and it is only allowed to turn around once it is a certain distance from the player, so it can never hover directly overhead. This forces it to strafe back and forth, and in conjunction with the bullets (fired only when it is facing the player), this feels like a good enemy that can create tension when used in conjunction with other, more immediate threats.
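One way to read the movement rules above is sketched below in Python. This is a guess at the behavior, not the game's code: the class layout, every tuning constant, and the idea of storing a single `direction` flag that may only flip beyond a turn distance are assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class Hoverfly:
    x: float
    base_y: float
    vx: float = 0.0
    direction: int = 1   # +1 = facing/moving right, -1 = left
    time: float = 0.0
    y: float = 0.0

    # Placeholder tuning values (assumptions, not from the game).
    ACCEL_PER_PIXEL = 2.0   # acceleration grows with distance to the player
    MAX_SPEED = 120.0       # cap on horizontal speed
    TURN_DISTANCE = 48.0    # must be at least this far away to turn around
    BOB_AMPLITUDE = 8.0
    BOB_RATE = 2.0          # radians per second

    def update(self, player_x, dt):
        self.time += dt
        # Vertical motion: a sine wave, independent of horizontal motion.
        self.y = self.base_y + self.BOB_AMPLITUDE * math.sin(self.BOB_RATE * self.time)

        offset = player_x - self.x
        distance = abs(offset)
        # Turning toward the player is only allowed once far enough away,
        # so the enemy overshoots instead of parking overhead.
        if distance >= self.TURN_DISTANCE:
            self.direction = 1 if offset > 0 else -1
        accel = self.ACCEL_PER_PIXEL * distance * self.direction
        self.vx = max(-self.MAX_SPEED, min(self.MAX_SPEED, self.vx + accel * dt))
        self.x += self.vx * dt

    def may_fire(self, player_x):
        # Bullets are fired only while facing the player.
        return (player_x - self.x) * self.direction > 0
```

With these rules the enemy sweeps past the player, keeps going until it is `TURN_DISTANCE` away, then reverses, which produces the back-and-forth strafing described.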

This was my first case of aiming shots at the player that aren’t affected by gravity. I previously discussed the case of shots that are affected by gravity in an earlier blog, and I mentioned this case in passing. It’s a simpler problem, but one that I hadn’t yet had cause to implement.
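For reference, the gravity-free case reduces to normalizing the vector from shooter to target and scaling it by the bullet speed. A minimal Python sketch follows; the function name and the tuple-based positions are assumptions for illustration.

```python
import math


def aim_velocity(shooter, target, speed):
    """Velocity (vx, vy) for a straight-line shot from shooter toward target.

    shooter and target are (x, y) tuples; speed is in units per second.
    """
    dx = target[0] - shooter[0]
    dy = target[1] - shooter[1]
    distance = math.hypot(dx, dy)
    if distance == 0.0:
        # Target is exactly on top of the shooter: no meaningful direction.
        return (0.0, 0.0)
    return (dx / distance * speed, dy / distance * speed)
```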

I already have ladders for facilitating vertical movement (and have had them for nearly the entire development of this game, going back to last December), but there have been a couple instances in my test maps where I’ve really wanted elevators for one reason or another.

Fundamentally, this didn’t seem like a difficult problem. I already had moving platforms in Super Win; the big difference here would be that I’d need to alter their movement based on the player’s posture: crouch to move down, look up to move up.

Where this got a little trickier is in how my collision system handles collision response.

When you take an elevator up, I have an invisible blocker sitting at the top of the shaft that only the elevator itself can collide against. It hits this thing and stops and the player disembarks. The problem I was encountering was that, even though the elevator would appear to be flush with the adjacent floor, the player would get stuck on the corner and would have to jump to leave the elevator.

I knew exactly why this was happening as soon as I saw it. My collision system defines a small value as the thickness of all collidable surfaces. (To provide a sense of scale, this is 0.015 pixels in Gunmetal Arcadia.) In practice, this means that in normal cases, colliders should always stop 0.015 pixels away from each other, but they are able to correctly handle colliding anywhere within this buffer space. Once they are actually physically intersecting each other, no collision result will be produced, and the objects will phase through each other. This helps to account for floating point imprecision, but in this case, it was also creating a bug. The elevator would stop 0.015 pixels below the adjacent floor, and the player would catch on that tiny edge.

My fix for this ended up being a bit of a one-off hack, but given how unlikely it is to encounter another bug of this sort, I didn’t feel like a more general solution would have been appropriate. The elevator gets a callback when it collides with something, and in response, it rounds its own position to the nearest integer (which in this case also means the nearest pixel). This makes it flush with the adjacent floor so the player can walk safely between the two. It also puts it flush with the invisible collider, as close as it can possibly be without phasing through.
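The effect of the fix can be shown with a few lines of Python. This is only a sketch: the 0.015 value comes from the text above, while the `Elevator` class, the `resolve_stop` helper, and the y-grows-downward convention are assumptions.

```python
SURFACE_THICKNESS = 0.015  # collidable surface thickness, in pixels


def resolve_stop(blocker_y):
    """Where a rising object comes to rest: one surface thickness below the blocker.

    Assumes screen-style coordinates where y increases downward.
    """
    return blocker_y + SURFACE_THICKNESS


class Elevator:
    def __init__(self, top_y):
        self.top_y = top_y

    def on_collision(self):
        # One-off fix: snap to the nearest integer (the nearest pixel here)
        # so the platform sits flush with the adjacent floor.
        self.top_y = float(round(self.top_y))
```

Before the callback runs, the elevator rests at 64.015 against a blocker at 64, leaving a tiny lip; after it, the elevator sits at exactly 64.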

Since I’m dealing with axis-aligned (and frequently integer-aligned) bounding boxes, some of these systems are perhaps a little aggressive for what this game needs, but it’s never been my goal to support 2D platformer games exclusively, so it’s important to build with other use cases in mind.

Finally, I checked off a longstanding TODO and brought over some screen shake stuff from Super Win. My biggest use case was for bombs, but I’ll probably also use this for boss death effects and maybe one or two other things. I’m not really a huge fan of the proliferation of overblown screen shake among indie games recently (yes, juice it or lose it, but maybe show a little restraint?), so I’ve tried to keep this fairly quick and conservative. It’s a little difficult to tell in the animated GIF below, but the shake is also quantized in time, only updating every two or three frames. This gives it an interesting sort of “chunky” feel that, to my eye, is a little more authentic to NES games and feels more stable than wild rubberbandy screen shake.
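Quantizing shake in time amounts to picking a new offset only every few frames and holding it in between. Here is a minimal Python sketch of that idea; the class, its parameters, and the random integer offsets are assumptions rather than the game's implementation.

```python
import random


class ScreenShake:
    def __init__(self, amplitude=3, duration_frames=18, hold_frames=3, seed=None):
        self.amplitude = amplitude          # max offset in whole pixels
        self.remaining = duration_frames    # frames of shake left
        self.hold_frames = hold_frames      # frames each offset is held
        self.frames_until_update = 0
        self.offset = (0, 0)
        self.rng = random.Random(seed)

    def tick(self):
        """Advance one frame and return the camera offset for that frame."""
        if self.remaining <= 0:
            self.offset = (0, 0)
            return self.offset
        if self.frames_until_update == 0:
            a = self.amplitude
            # Pick a new offset only on update frames; hold it otherwise.
            self.offset = (self.rng.randint(-a, a), self.rng.randint(-a, a))
            self.frames_until_update = self.hold_frames
        self.frames_until_update -= 1
        self.remaining -= 1
        return self.offset
```

Keeping the amplitude and duration small is what keeps the effect conservative; the hold is what gives it the chunky look.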

A couple last things. I’m planning to take some time off for paternity leave starting in another week or two. I may still be working on the game when I’m able (I mean, that’s kind of what I do for fun), but I can’t make any guarantees as to what my blogging and video production schedule will look like. If nothing else, my Twitter should stay active.

Sometime in the extremely near future, I’m also going to be putting together a first draft of materials for a GDC talk next year, so I may devote a video log or two to dry runs of this material to get comfortable with it and solicit feedback. Stay tuned!

Episode 18: “Routine”

Control Binding

It’s been a while since I’ve done a deep dive into some aspect of my engine, so I thought I’d talk a little bit about how control binding works in my engine, some of the concessions I’ve made to support various gameplay requirements, and some general thoughts on input handling best practices.

If I go back to the earliest origins of input handling in my engine, it began with polling a DirectInput device for complete mouse and keyboard state each frame. By comparing the current state to a cached state from the previous frame, I could catch key-up and key-down events in addition to knowing whether a key was pressed (or a mouse button, or whether a mouse axis was moving). This pattern remains the basis for all my input code: poll, compare values against the previous frame’s, and react.
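The poll-compare-react pattern is small enough to sketch in a few lines. The Python below is illustrative only, not the engine's code; `KeyTracker` and its method names are made up, and the device state is modeled as a plain dictionary of key names to booleans.

```python
from dataclasses import dataclass, field


@dataclass
class KeyTracker:
    """Keeps this frame's and last frame's key state so edges can be detected."""
    previous: dict = field(default_factory=dict)
    current: dict = field(default_factory=dict)

    def poll(self, device_state):
        # Cache last frame's state, then snapshot the new one.
        self.previous = self.current
        self.current = dict(device_state)

    def is_down(self, key):
        return bool(self.current.get(key, False))

    def went_down(self, key):
        # Down now, up last frame: a key-down event.
        return self.is_down(key) and not self.previous.get(key, False)

    def went_up(self, key):
        # Up now, down last frame: a key-up event.
        return bool(self.previous.get(key, False)) and not self.is_down(key)
```

Calling `poll` once per frame and then querying `went_down` or `went_up` gives the press and release events without any event queue from the OS.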

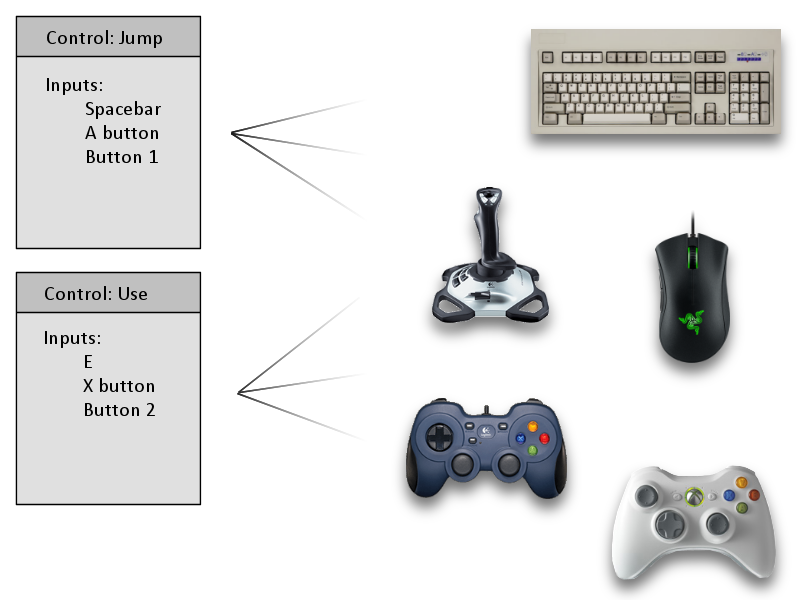

My earliest games and game demos had hardcoded controls simply because I had not taken the time to develop a complete control binding system, but it was always a goal of mine. As I continued developing my engine, it eventually became a topmost priority. The earliest manifestations of this system appeared in August 2008, as I was nearing completion on Arc Aether Anomalies. At the time, I had only gotten as far as abstracting device-specific inputs (keys, buttons, and axes) from high-level game-facing controls (move, shoot, etc.). The specific inputs were still hardcoded, but after an initial setup pass, they were safely abstracted away and never had to be referenced directly again. Instead of asking, “Is the player clicking the left mouse button and/or pressing the right trigger?” I could ask, “Is the player pressing the fire button?” and it would mean the same thing. This simplified my game code by allowing me to test a single value automatically aggregated from any number of sources under the hood, but it would be another two years before this would become a user-facing system that could support arbitrary control bindings defined by the player.

So let’s talk implementation. My engine defines an “input” as any single element of a device which can be polled to produce a value in the range [0, 1]. This may be keys on a keyboard, mouse buttons and axes, gamepad buttons and triggers, analog stick and joystick axes, wheels, sliders, directional pads and POV hats, whatever. The polling methods and return values for each of these vary somewhat from API to API, so my first line of attack in normalizing this data is to bring everything into the [0, 1] range. Sometimes this is trivial; keys are either up (0) or down (1). Most devices report axes independently, so for instance, analog stick axes on gamepads are reported as separate X and Y values in some range, often as signed shorts [-32768, +32767]. This can be scaled down into the [-1, +1] range and then separated into positive and negative axes each in [0, 1]. An exception to this is POV hats (which also usually include directional pads); these are reported in terms of hundredths of a degree clockwise from the top, or the special value 0xFFFF (65535) to indicate no movement. (As a personal aside, if anyone’s ever encountered a POV hat that took advantage of this precision, I’d be fascinated to learn more. I’ve never seen one that didn’t clamp to eight-way directions.)
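As a rough illustration of that normalization step, here is a Python sketch that splits a signed 16-bit axis into two [0, 1] inputs and decodes a POV hat into four digital directions. The function names are hypothetical, snapping the hat to eight sectors is an assumption, and the centered check (low 16 bits equal to 0xFFFF) follows the DirectInput convention as I understand it.

```python
def split_signed_axis(raw):
    """Map a signed 16-bit axis value to (negative, positive), each in [0, 1]."""
    # The range is asymmetric, so each side is divided by its own extreme
    # to let both ends reach exactly 1.0.
    if raw < 0:
        return (-raw / 32768.0, 0.0)
    return (0.0, raw / 32767.0)


# Which directions are active in each 45-degree sector, clockwise from up.
_SECTORS = [
    ("up",), ("up", "right"), ("right",), ("right", "down"),
    ("down",), ("down", "left"), ("left",), ("left", "up"),
]


def split_pov(raw):
    """Decode a POV angle (hundredths of a degree, clockwise from up)."""
    result = {"up": 0.0, "right": 0.0, "down": 0.0, "left": 0.0}
    if (raw & 0xFFFF) == 0xFFFF:
        return result  # hat is centered
    # Snap to the nearest of eight directions (assumed behavior).
    sector = ((raw + 2250) // 4500) % 8
    for name in _SECTORS[sector]:
        result[name] = 1.0
    return result
```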

My engine defines a “control” as a list of inputs with a name indicating its purpose in the game (“walk left,” “look up,” “jump,” “attack,” and so on). Controls may also optionally specify rules regarding non-linear scaling and acceleration over time, which is desirable for certain cases like camera rotation in a first-person game. The state of a control may be queried, and the result will be the sum of all its inputs, clamped to [0, 1], with these additional rules applied.
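A control in this sense can be modeled as a named list of input readers whose values are summed and clamped. The Python sketch below is an assumption-laden illustration, not the engine's design: inputs are plain callables returning [0, 1], the only "rule" shown is a hypothetical `exponent` for non-linear scaling, and acceleration over time is left out.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Control:
    name: str
    # Each input is a zero-argument callable returning a value in [0, 1].
    inputs: List[Callable[[], float]] = field(default_factory=list)
    exponent: float = 1.0  # hypothetical non-linear scaling rule

    def value(self):
        # Sum every bound input, clamp to [0, 1], then apply the scaling rule.
        total = sum(read() for read in self.inputs)
        clamped = max(0.0, min(1.0, float(total)))
        return clamped ** self.exponent
```

Game code then asks a single question such as `fire.value() > 0` without caring whether a mouse button, a trigger, or both are behind it.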

The systems described above were sufficient for shipping Arc Aether Anomalies, but since then, I’ve added support for user-defined control bindings. As you could probably guess, this involves changing the inputs associated with a control at runtime based on player input. This is simple in principle, but there are a number of tricky issues that crop up when putting it into practice.

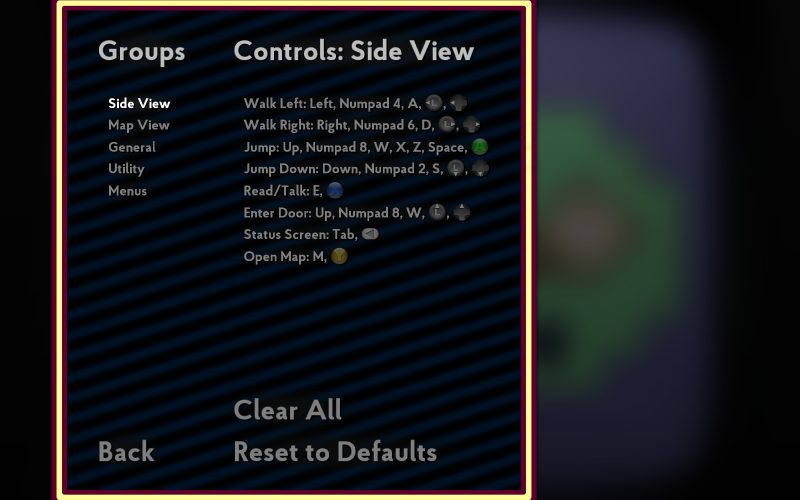

One of the first “gotchas” I encountered in implementing this was dealing with making input bindings unique. That is to say, if the left mouse button is currently bound to “shoot” and I attempt to bind it to “use,” it should be removed from the “shoot” control. This raises a number of questions about the nature of how controls should or should not overlap. For instance, let’s assume I am using this same control binding system for driving input to menus. It would not be reasonable to assume that the left mouse button could only be associated with shooting in the game or activating buttons in the menu but not both. To address this, I introduced the notion of “control groups.” Each group deals with a different mode or context, and this is transparently displayed within the control binding menus, as seen in this screenshot from Super Win the Game.

Each input is considered unique only within the context of the current group. Assigning the left mouse button to a control in the “gameplay” group has no effect on its bindings in the “menu” group.

Unfortunately, even this solution has proven to be less than effective as the number of actions the player may take has grown with each game I’ve made. In the original You Have to Win the Game, the player’s actions were limited to walking, jumping, and jumping down through shallow platforms. There was essentially no potential for overlap or conflict here. In Super Win, I added some new abilities, including entering doors. The natural default for this control was up: the up arrow key on the keyboard (or W), up on the d-pad, up on the analog stick, whatever device the player might be using, up. This created a conflict in that I was also already using the up arrow key for jumping when playing with the keyboard. Sure, I could have changed that one to Z, X, C, or the spacebar, but it felt wrong to not support both at once. To solve this, I added another system wherein specific controls within the same group can optionally allow the same input to be bound to each. In this way, the up arrow key can be both “jump” and “enter doors” in Super Win.
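Both rules, uniqueness within a group and the opt-in exception for specific control pairs, fit in a small table. The Python below is a sketch under assumptions: `BindingTable`, `allow_sharing`, and the string names for groups, controls, and inputs are all invented for illustration.

```python
class BindingTable:
    def __init__(self):
        # group name -> control name -> set of input names
        self.groups = {}
        # (group, {control_a, control_b}) pairs allowed to share an input
        self.shareable = set()

    def allow_sharing(self, group, control_a, control_b):
        self.shareable.add((group, frozenset((control_a, control_b))))

    def bind(self, group, control, input_name):
        controls = self.groups.setdefault(group, {})
        for other, inputs in controls.items():
            if other == control:
                continue
            if (group, frozenset((other, control))) in self.shareable:
                continue  # this pair may share the input
            # Otherwise the input is unique within the group: steal it.
            inputs.discard(input_name)
        controls.setdefault(control, set()).add(input_name)

    def inputs_for(self, group, control):
        return set(self.groups.get(group, {}).get(control, set()))
```

Because sharing is declared per pair of controls, the places where overlap can happen are easy to list, which matches the upside noted for handling this at the control level.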

I’m not totally happy with that solution. It’s an odd one-off backpedaling of another system’s effects, and it’s not made clear to the end user in any way other than trial and error. It would be nice if I could scope my controls better such that these cases simply could not occur, but this doesn’t feel like a viable solution either. Consider the case described above; if “jump” and “enter doors” were merged into a single control, then the A button on the gamepad would activate doors by default, almost certainly never the player’s intent. On the bright side, the fact that this workaround is implemented at the control level rather than the input level means that it’s at least somewhat easy to figure out where these conflicts might arise and suppress them.

I have a whole bunch of input-related notes remaining and no good way to tie them into a larger narrative, so I’m just gonna rapid fire some thoughts here.

Mouse input is an oddity. Unlike most other devices, the values it reports when polled effectively have a delta time premultiplied into them and are unbounded. An analog stick may only be pushed as far as its physical bounds will allow, but a mouse may be pushed arbitrarily far in a single frame. This breaks some of the assumptions I make regarding the [0, 1] range of inputs and requires some workarounds of its own. While a control is actively receiving mouse inputs, it does not do any acceleration or non-linear scaling. This has the desired result on both fronts. Our input value may be well outside the [0, 1] range, which our acceleration and non-linear scaling rules are not equipped to handle, and from the player’s perspective, mouse input should not be subject to these rules anyway, as they could create unnatural, non-1:1 interpretations of the mouse’s movement.

Two-dimensional inputs such as analog sticks require a little bit of special attention. As I mentioned above, I decompose these inputs not only into the separate X and Y axes as they are typically reported by the API, but also into separate positive and negative regions. As I’ve noted, this is advantageous because it allows me to treat all input as being within the [0, 1] range, although it is perhaps a little odd from the user experience, when all four points of the compass have to be bound separately. (Counterpoint: this is arguably the ultimate expression of Y axis inversion.) But regardless of how the axes are represented under the hood, it’s important to recognize that the input exists as a single physical thing in ~~meatspace~~ the real world and handle it accordingly, specifically with regard to dead zones and non-linear scaling.

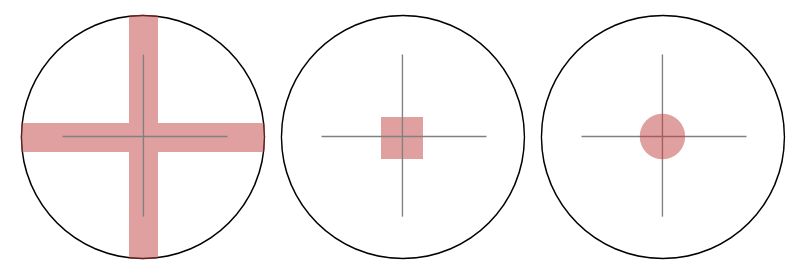

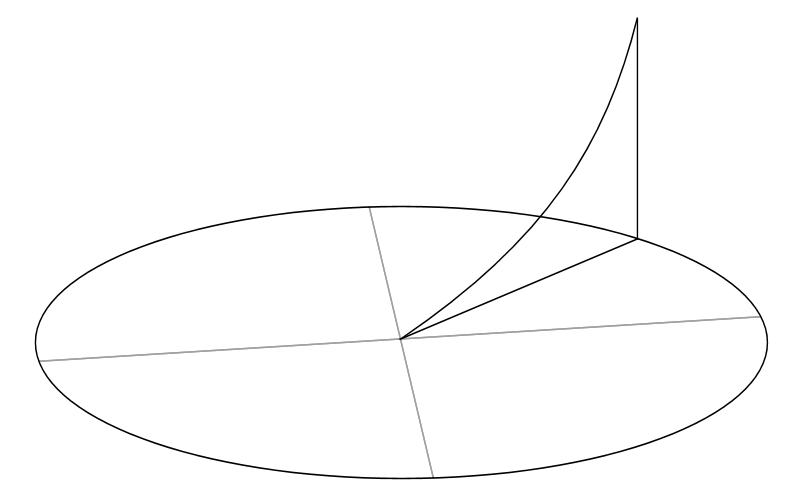

If you were to draw a picture, what would the dead zones on an analog stick look like? There’s no wrong answer, but it’s important to consider every option so you can make an informed decision. Perhaps the easiest sort of dead zone to implement is a cross- or plus-shaped one. This can be done by clamping the input from each axis separately. There are times this may be desirable, for instance if you want to suppress errant side-to-side motion while holding up/forward in a first-person game. But I tend to prefer circular dead zones, and to accomplish that, we must look at both axes together. We can represent the axes as a two-dimensional vector and then apply dead zone clamping to its magnitude. Then we can decompose the vector into separate X and Y elements and output the result of each.

I tend to be fairly aggressive with my dead zones, on account of frustrations I’ve had in the past in which movement persisted even when the stick was released (because it was physically sticking a little bit to one side) and when I could not reach full speed pushing in certain directions. The latter case is where outer dead zones are important. I model both inner and outer dead zones as circles, clamping the magnitude of the 2D vector to within this range and scaling to [0, 1].
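A circular inner and outer dead zone can be written as a single function on the stick vector. This Python sketch is illustrative; the function name and the default radii are assumptions, not the engine's values.

```python
import math


def apply_radial_dead_zone(x, y, inner=0.25, outer=0.9):
    """Apply circular inner/outer dead zones to a stick vector.

    Magnitudes at or below `inner` map to 0, magnitudes at or above
    `outer` map to 1, and everything between is rescaled linearly.
    The direction of the vector is preserved.
    """
    magnitude = math.hypot(x, y)
    if magnitude <= inner:
        return (0.0, 0.0)
    scaled = min(1.0, (magnitude - inner) / (outer - inner))
    factor = scaled / magnitude
    # Decompose back into separate X and Y outputs.
    return (x * factor, y * factor)
```

A stick that rests slightly off-center produces no motion, and a worn stick that cannot quite reach its physical limit still reports full magnitude.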

Non-linear scaling is an interesting one if only because so many games get it wrong — and I’m not talking about more easily forgiven hobbyist and indie games, I’m talking huge multi-million AAA franchise games. A surprising number of high-profile titles have made the mistake of applying non-linear scaling to each axis independently. It’s perhaps a minor quibble in the big picture, but it’s a crucial part of maintaining a fluid, natural game feel, and I hope by now I’ve made it clear just how important game feel is to me.

So once again, the solution is to combine your X and Y axes into a single 2D vector, apply non-linear scaling to its magnitude as a whole rather than its separate X and Y values alone, then decompose it into its components and return the one you need.

To illustrate why this is important (and possibly ruin a number of otherwise great first-person shooters for you once you notice it), consider the case of pushing the analog stick 45 degrees up and to the right. Assuming a circular gate and perfectly accurate hardware, this should give us X and Y values of 0.707 each (which is ½√2 because the Pythagorean theorem blah blah). I’m ignoring sign here; it’s possible that up on the stick would be negative Y but whatever. Let’s say then that we’re doing some non-linear scaling with a power of two. If we did this on each axis separately, we’d get results of 0.5 for both the X and Y axes. This gives us a resulting magnitude of only 0.707. If we used this method, we’d only be getting 70% of the intended input (70% movement, 70% rotation, etc.) despite the fact that the stick is pushed as far as it’s physically able! If instead, we formed a 2D vector first, its magnitude would be 1.0. Then non-linear scaling with a power of two would have no effect on it, and the resulting magnitude would still be 1.0, correctly representing the player’s intent.
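The arithmetic above is easy to check with a few lines of Python. Both function names are hypothetical, and clamping the magnitude to 1.0 is an added assumption to cover sticks with square gates.

```python
import math


def scale_per_axis(x, y, power=2.0):
    """The mistaken approach: apply the curve to each axis independently."""
    return (math.copysign(abs(x) ** power, x),
            math.copysign(abs(y) ** power, y))


def scale_by_magnitude(x, y, power=2.0):
    """Apply the curve to the length of the 2D vector, keeping its direction."""
    magnitude = math.hypot(x, y)
    if magnitude == 0.0:
        return 0.0, 0.0
    scaled = min(magnitude, 1.0) ** power
    factor = scaled / magnitude
    return x * factor, y * factor


diagonal = math.sqrt(0.5)  # roughly 0.707 on each axis
per_axis = scale_per_axis(diagonal, diagonal)
combined = scale_by_magnitude(diagonal, diagonal)
print(math.hypot(*per_axis))  # about 0.707: a full push reads as only ~70%
print(math.hypot(*combined))  # about 1.0: a full push stays a full push
```

The two methods agree along the cardinal directions and diverge most at the diagonals, which is why the problem tends to show up as sluggish diagonal movement or rotation.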

There are more details I could talk about with regard to my input system, from dealing with a multitude of APIs across each platform, to interpreting manufacturer and device GUIDs to make an educated guess as to how to display button prompts, to ideas for the future to move input to a separate thread that can run at full speed regardless of the game’s state. But this is the nuts and bolts of my control binding system specifically, and I’m pretty happy with how it’s turned out, and hopefully it’s beneficial to players to have the flexibility to define their own control schemes.

Episode 17: “Redshift”

Grab Bag 5

It’s been another week of various gameplay tasks, bug fixes, and editor work. I don’t have a strong thematic hook to tie all these bits together this week, so…it’s time for another Grab Bag!

I’m getting close to wrapping development on the biped enemy. I still need to modify his behavior when he starts pursuing the player, to increase foot speed and disregard the edges of platforms, but otherwise, I’ve knocked out a lot of the big issues here. When this guy initially spawns and is unaware of the player, he’ll patrol back and forth, as I showed in last week’s video. More recently, I’ve added a line-of-sight test to the player character. Once he has eyes on the player, the biped will begin pursuing, close to melee distance, and attack. I’m pretty happy with how this feels already, but I’ll probably want to continue tuning it to strike the right balance between fairness and difficulty. It doesn’t feel right when the enemy can immediately recover from damage and knockback and return fire, but combat becomes trivially easy if enemies can be caught in a loop of taking damage and being stunned. I don’t yet have a good solution for that one, but I’ll be taking a look at similar games soon to see how this sort of thing is typically handled.

I’ve been tackling a few bugs recently. One I tweeted about earlier this week after finally discovering a reliable repro.

It was immediately obvious what was happening once I discovered this repro, but this bug had been lingering for a week or two in that “seen once, beginning to doubt my own eyes” state. The issue here stems from reusing technology originally built for keeping the player character attached to moving platforms to attach weapons to their owners’ hands. In the event that a viable attachment base (a moving platform, or, in this case, the player character) sweeps upward into an entity that can be attached to others, it parents itself to that thing, no questions asked.

This could also happen when the biped enemy jumped into the player’s sword, and I had seen this happen once or twice during normal gameplay testing as well.

The quick fix was to disallow entities from changing bases if they’re already hard-attached to something else. As I mentioned in a previous video, a “hard attachment” is something which is treated as an extension of the thing it’s attached to, rigidly moving where its parent moves with no regard for collision. By comparison, when the player stands on a moving platform, they are “soft-attached” and will try to move with the platform, but will stop if they collide with a wall.
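In rough terms, the guard looks like the following Python sketch. The `Entity` class, its fields, and both function names are hypothetical stand-ins, not the engine's actual types.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(eq=False)
class Entity:
    name: str
    base: Optional["Entity"] = None
    hard_attached: bool = False


def attach(entity, base, hard=False):
    """Parent `entity` to `base`, as either a hard or a soft attachment."""
    entity.base = base
    entity.hard_attached = hard


def on_swept_by_base(entity, candidate_base):
    """Called when a viable base sweeps upward into an attachable entity.
    Returns True if the entity was re-parented to the candidate."""
    if entity.hard_attached:
        # Already rigidly attached to something else: keep the current base.
        return False
    attach(entity, candidate_base, hard=False)
    return True
```

A sword hard-attached to the player's hand would ignore a biped jumping up into it, while a free-standing entity landing on a moving platform would still soft-attach as before.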

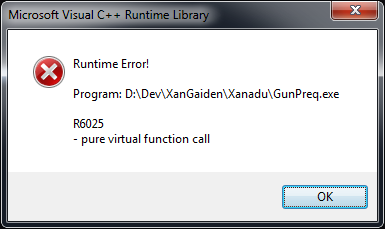

I had encountered another bug a few times in the last couple of weeks that had me stumped. Infrequently, during normal gameplay, I would get a crash with this message:

I had run across this one a few times while testing from the editor (i.e., running a release build with no debugger attached), but I hadn’t been able to get a repro in a debug build, nor could I find any reliable repro steps.

Eventually, I did hit this while debugging. It was a memory access violation, incurred when attempting to do an initial zero-time tick on an entity that had been created in the previous frame but which had been immediately destroyed by some other force.

That information was sufficient to theorize what was going on, and from there, I was able to narrow down a repro. What was happening (and this explains why the bug was so rare and also why it was difficult to test even once I understood the problem) was that, if the player swung their sword at exactly the same moment they took damage, the game would crash. When the player performs a melee attack, the weapon entity is created in response to playing an animation sequence that wants to spawn an attachment. As such, the sword entity is spawned in the middle of doing normal game loop delta-time entity ticking. Entities spawned mid-tick are held in a list to perform an initial zero-time tick once all other entities have been ticked, in order to ensure they are set up properly. So a pointer to the newly created sword entity is sitting in a list somewhere, and then, as we continue ticking entities this frame, the player takes damage. In response to taking damage, we switch to a hurt/stunned animation sequence, one that does not have an attachment point for a melee weapon. This destroys the sword attachment we just created, but the pointer we had saved off earlier remains. At the end of the tick, we go to do a zero-time tick on it and crash reading bad memory.
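The sequence of events can be modeled in a few lines of Python. Python has no dangling pointers, so this only reproduces the bookkeeping, and every name here is hypothetical. Removing the entry from the pending list on destruction is shown as one possible mitigation, not necessarily the fix used in the engine.

```python
class Tickable:
    def __init__(self, name, on_tick=None):
        self.name = name
        self.on_tick = on_tick  # optional callback run during each tick
        self.tick_log = []      # delta times this object has been ticked with

    def tick(self, dt):
        self.tick_log.append(dt)
        if self.on_tick:
            self.on_tick(dt)


class World:
    def __init__(self):
        self.tickables = []
        self.pending_initial_tick = []  # spawned mid-tick, awaiting a zero-time tick
        self.ticking = False

    def spawn(self, tickable):
        self.tickables.append(tickable)
        if self.ticking:
            self.pending_initial_tick.append(tickable)
        return tickable

    def destroy(self, tickable):
        self.tickables.remove(tickable)
        # Without this step, the pending list keeps a stale reference.
        if tickable in self.pending_initial_tick:
            self.pending_initial_tick.remove(tickable)

    def tick(self, dt):
        self.ticking = True
        for tickable in list(self.tickables):
            if tickable in self.tickables:  # skip anything destroyed this frame
                tickable.tick(dt)
        self.ticking = False
        pending, self.pending_initial_tick = self.pending_initial_tick, []
        for tickable in pending:
            tickable.tick(0.0)  # initial zero-time tick
```

If the player's tick spawns a sword and a damage response destroys it later in the same frame, the sword is gone from the pending list before the zero-time pass runs, so nothing stale gets ticked.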

In many cases, I can avoid these sorts of issues by using handles instead of pointers, as a handle to a destroyed entity would be correctly recognized as invalid and not dereferenced. In this case, however, I’m dealing with pointers to “tickables,” an abstract class that exists outside of my entity/component hierarchy and is therefore unable to be referenced by a handle. (I should stress this limitation is specific to my engine architecture and could be considered motivation for a more generic refactoring of handles, but I’m not quite there yet.)
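For readers unfamiliar with the idea, a handle is typically an index plus some way of detecting that the slot has since been freed or reused. The following Python sketch uses a per-slot generation counter, which is a common approach but an assumption here; the post does not describe how the engine's handles are implemented, and `HandleTable` is a hypothetical name.

```python
class HandleTable:
    """Hands out (index, generation) handles that go stale on destroy."""

    def __init__(self):
        self._slots = []  # each slot is [generation, object or None]
        self._free = []   # indices of slots available for reuse

    def create(self, obj):
        if self._free:
            index = self._free.pop()
            self._slots[index][1] = obj
        else:
            index = len(self._slots)
            self._slots.append([0, obj])
        return (index, self._slots[index][0])

    def destroy(self, handle):
        index, generation = handle
        slot = self._slots[index]
        if slot[0] != generation or slot[1] is None:
            return  # stale handle or already destroyed: nothing to do
        slot[0] += 1  # invalidate every outstanding handle to this slot
        slot[1] = None
        self._free.append(index)

    def resolve(self, handle):
        """Return the live object, or None if the handle is no longer valid."""
        index, generation = handle
        slot = self._slots[index]
        return slot[1] if slot[0] == generation else None
```

The key property is that a holder of an old handle gets `None` back instead of touching freed memory, even after the slot has been reused for a different object.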

One of my development machines is a laptop I purchased in early 2011 for doing some networked game testing. It’s become my primary Linux dev machine by necessity, so I find myself testing my games on it fairly regularly. This laptop has a GeForce G210M video card and is capable of running some 3D games fairly well, so in theory, a 2D game should present no problem. What I’ve found, however, is that more so than any of my other test machines, this laptop gets very pixel-bound very quickly. This has become a problem over time as I’ve continued adding fullscreen passes here and there throughout my game framework to support features like gamma adjustment or blurring out the game scene underneath the menus.

Recently, it occurred to me that if I could bypass some of these default features, I could support an optional high-performance mode. This mode would exclude any CRT simulation rendering, but it would also avoid as many fullscreen draws as possible. It would prioritize speed over visual quality. My goal was to get as close as possible to rendering the game scene directly to the backbuffer. Since I’m already rendering the game scene to a pixel-perfect 256×240 render target texture, I chose not to alter that path, but once the scene has been composed, I render a single fullscreen quad to the backbuffer, and that’s it. Under these conditions, I can maintain a constant 60 frames per second while running in fullscreen on my laptop, so I’m pretty happy with that. I haven’t yet added this option to the menu, so it’s not really a fully supported feature yet, but it can be enabled from the console or config file, and at the very least, I plan to ship that implementation.

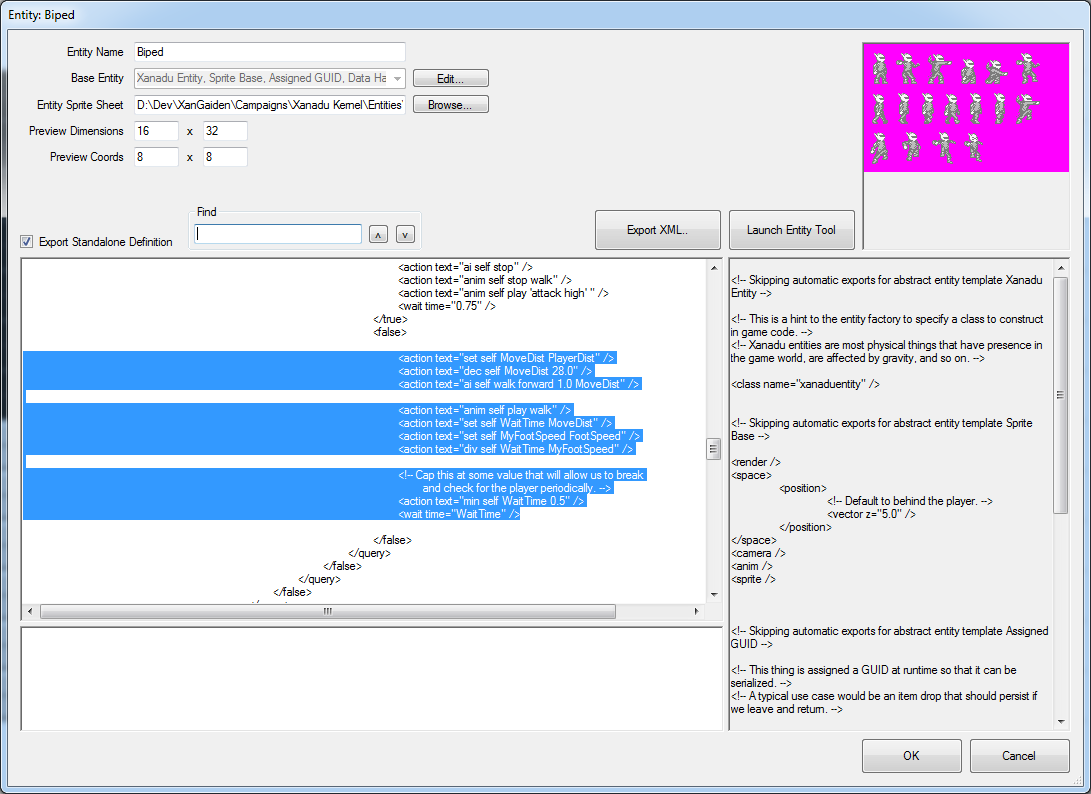

I did a little bit of editor work a few days ago in the interest of providing myself with a more comfortable and familiar environment for writing game scripting markup. As I’ve mentioned in a couple recent videos, much of my gameplay — enemy AI, in particular — is authored with XML markup in an editor window. This isn’t necessarily the best environment, and I’ve talked in the past about how large, wordy blocks of markup are usually a good indication that a feature needs to be broken out into a separate visual interface, as I’ve done in the past with animation and collision. However, it seems unlikely that I’ll move entirely away from writing markup by hand anytime soon, certainly not in time to ship the Gunmetal Arcadia titles, so I figured it would be worth my time to make some improvements to the interface as long as I’m going to continue using it for the foreseeable future.

I’ve been using a plain vanilla C# / .NET RichTextBox control for authoring markup within the editor. (For external definitions, I use Notepad++, which handles XML nicely, highlighting paired tags and so on.) RichTextBoxes are a decent place to start, but they don’t do everything exactly as I’d like. In particular, when I’m dealing with blocks of markup, the ability to select multiple lines and tab or shift-tab to indent and unindent is critical. RichTextBoxes don’t do this out of the box; by default, selecting a region and hitting Tab will overwrite the selected text with a single tab. I wound up implementing this myself, checking for lines in the selected region and inserting or removing tabs at the start of each line as appropriate. I also handle the case of hitting Shift+Tab when no text is selected to unindent the current line if able.
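The indent and unindent logic itself is independent of the control. Here is a sketch of the string manipulation in Python rather than C#, operating on the full text plus a selection range; the function name and parameters are hypothetical, and hooking it up to the RichTextBox's key handling is left out.

```python
def shift_lines(text, sel_start, sel_end, unindent=False):
    """Indent (or unindent) every line touched by the selection
    [sel_start, sel_end) by one tab and return the new text."""
    # A selection that ends just after a newline should not drag in the next line.
    if sel_end > sel_start and text[sel_end - 1] == "\n":
        sel_end -= 1
    # Expand the range to whole lines.
    first = text.rfind("\n", 0, sel_start) + 1
    last = text.find("\n", sel_end)
    if last == -1:
        last = len(text)
    lines = text[first:last].split("\n")
    if unindent:
        lines = [line[1:] if line.startswith("\t") else line for line in lines]
    else:
        lines = ["\t" + line for line in lines]
    return text[:first] + "\n".join(lines) + text[last:]
```

Passing the same value for `sel_start` and `sel_end` covers the no-selection case, so Shift+Tab with a bare cursor unindents just the current line.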

The next feature I wanted was Ctrl+F and F3 (and Shift+F3) to search for text in the markup window. As it turns out, C# makes this remarkably simple, thanks to a pair of string searching functions IndexOf() and LastIndexOf(). Between the two of these, it’s easy to look forward and backward from the cursor position to find the previous or next instance of the search phrase. Currently, I don’t support the usual “match case” or “whole word only” criteria, but it’s conceivable I might want these at some point in the future.
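The same forward and backward search can be sketched in Python, where `str.find()` and `str.rfind()` play the roles of `IndexOf()` and `LastIndexOf()`. The function names are hypothetical, and wrapping around at the ends of the text is an assumption rather than something described above.

```python
def find_next(text, phrase, cursor):
    """Index of the next match at or after `cursor`, wrapping to the top.
    Pass the end of the current selection to step past the current match.
    Returns -1 if the phrase does not occur at all."""
    if not phrase:
        return -1
    index = text.find(phrase, cursor)
    if index == -1:
        index = text.find(phrase)  # wrap around to the start
    return index


def find_previous(text, phrase, cursor):
    """Index of the last match that ends at or before `cursor`,
    wrapping to the bottom. Returns -1 if the phrase does not occur."""
    if not phrase:
        return -1
    index = text.rfind(phrase, 0, cursor)
    if index == -1:
        index = text.rfind(phrase)  # wrap around to the end
    return index
```

This version is always case-sensitive and matches substrings anywhere, so "match case" and "whole word only" options would need additional handling on top of it.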

Finally, in the interest of legibility (as some of my AI markup has grown to as many as fifteen nested tags in places), I’ve reduced the size of tabs from their default 48 pixels to 25, allowing deeply nested tags to remain more visible.

I’ve been reevaluating my schedule in light of recent Real Life Events encroaching on my work hours. I haven’t committed to any changes yet, but I’m considering shifting videos from Wednesday to Friday. We’ll see. I’ll probably be taking some time off from my regular blogging and video production schedule for paternity leave in early November or thereabouts. What exactly that will look like and how long I’ll be away are still up in the air.